
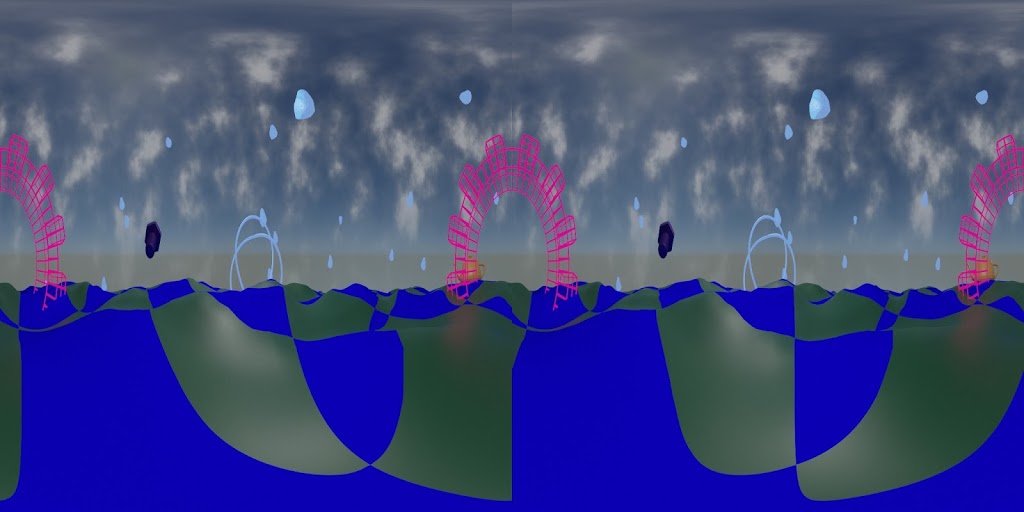

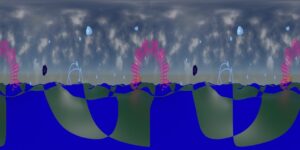

3D stereoscopic test frame, SBS.

Tag: 360

A stitch in time (or space)

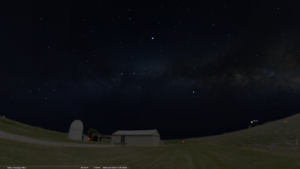

Since the planetarium will be operating online for the foreseeable future, I’ve been working on ways to give everything a nice local touch.

One way has been to make custom panoramas for use with Stellarium.


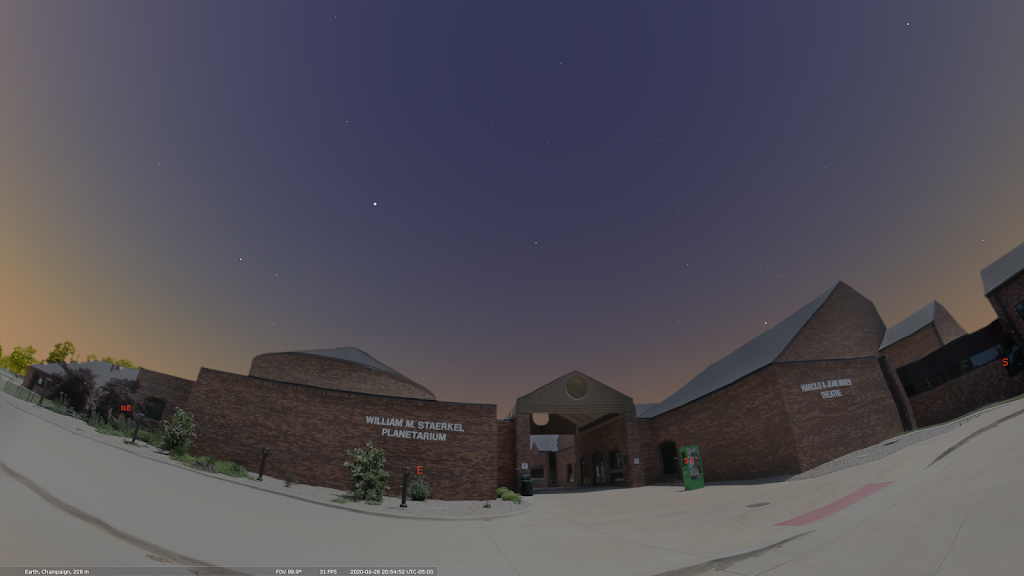

William M. Staerkel Planetarium

Parkland College

Champaign-Urbana Astronomical Society Observatory

We should be able to use them with our Digistar 6 in the dome once we are able to reopen. I like making content that can be used on different platforms.

I used a DSLR with a fisheye lens on a tripod to get a good selection of overlapping images, making sure to have some shots with objects of interest centered.
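To get a feel for how many overlapping shots a full turn takes, here is a rough planning sketch. It assumes each frame covers a fixed horizontal field of view and that each new frame only adds the part that does not overlap the previous one. The 120° field of view and 30% overlap below are hypothetical numbers, not measurements of my lens.

```python
import math


def shots_per_row(horizontal_fov_deg: float, overlap: float) -> int:
    """Estimate how many frames cover one full 360° row.

    Each frame advances the row by the fraction of its field of view
    that does not overlap its neighbour, so we divide 360° by that step
    and round up.
    """
    if horizontal_fov_deg <= 0:
        raise ValueError("field of view must be positive")
    if not 0.0 <= overlap < 1.0:
        raise ValueError("overlap must be in [0, 1)")
    step = horizontal_fov_deg * (1.0 - overlap)  # new degrees added per frame
    return math.ceil(360.0 / step)


# Hypothetical lens: ~120° across the frame, ~30% overlap between shots.
print(shots_per_row(120.0, 0.30))
```

This only covers a single horizontal row; extra shots for the zenith and nadir (and any centered shots of objects of interest) come on top of it.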

I used Hugin to stitch the images. It’s not super automatic, but there are built-in tools for aligning images and for masking out troublesome spots.

Finally, I use GIMP to fix up the nadir a bit and to get rid of the sky. I also fix any small stitching errors that I missed earlier. Some distant power lines and light poles end up cut off in the process, but I can live with that.

It’s a messy process, but I use brightness and contrast settings and sometimes desaturation to get a nice mask. I work in smaller sections and then combine them.


And I usually have to over-mask the vegetation because I don’t have the patience to cut out around each leaf.
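The masking idea can be sketched in plain Python. This is not what GIMP does internally: it assumes a common Rec. 601 luma formula for desaturation, a simple "stretch around mid-gray" curve for brightness/contrast, and a fixed threshold, all of which are illustrative choices. `combine_masks` stands in for working in sections (each section's mask is assumed to be full-size, with zeros outside that section), and `grow_mask` is one hypothetical way to over-mask: dilate the foreground by a few pixels so small gaps of sky between leaves stay with the trees.

```python
def luma(rgb):
    """Desaturate one (r, g, b) pixel, channels 0-255, with Rec. 601 luma weights."""
    r, g, b = rgb
    return 0.299 * r + 0.587 * g + 0.114 * b


def brightness_contrast(value, brightness=0.0, contrast=1.0):
    """Stretch a gray value around mid-gray, shift it, and clamp to 0-255."""
    v = (value - 127.5) * contrast + 127.5 + brightness
    return max(0.0, min(255.0, v))


def sky_mask(pixels, brightness=0.0, contrast=3.0, threshold=128.0):
    """Return a 2D list with 1 where a pixel looks like bright sky, else 0.

    `pixels` is a list of rows, each row a list of (r, g, b) tuples.
    """
    return [
        [1 if brightness_contrast(luma(p), brightness, contrast) >= threshold else 0
         for p in row]
        for row in pixels
    ]


def combine_masks(*masks):
    """Union (logical OR) of same-sized masks, e.g. one per worked section."""
    return [[max(values) for values in zip(*rows)] for rows in zip(*masks)]


def grow_mask(mask, radius):
    """Dilate a binary mask by `radius` pixels (square neighbourhood).

    Columns wrap around because the left and right edges of a 360°
    panorama touch; rows are clamped at the top and bottom.
    """
    h = len(mask)
    w = len(mask[0]) if h else 0
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            if not mask[y][x]:
                continue
            for yy in range(max(0, y - radius), min(h, y + radius + 1)):
                for dx in range(-radius, radius + 1):
                    out[yy][(x + dx) % w] = 1
    return out


def apply_alpha(pixels, sky):
    """Make sky pixels fully transparent (alpha 0) and keep the rest opaque."""
    return [
        [(r, g, b, 0 if s else 255) for (r, g, b), s in zip(row, srow)]
        for row, srow in zip(pixels, sky)
    ]


# Tiny 2x2 test image: bright bluish sky on the left, dark foliage on the right.
pixels = [[(200, 220, 255), (40, 60, 30)],
          [(180, 200, 240), (90, 70, 50)]]
sky = sky_mask(pixels)
print(sky)  # [[1, 0], [1, 0]]
print(apply_alpha(pixels, sky))
```

To over-mask vegetation under this reading, invert the sky mask into a foreground mask, grow it with `grow_mask`, and invert it back before applying the alpha. The radius is a hypothetical knob to tune by eye.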

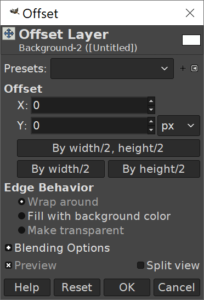

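Don’t forget to check your edge seams. In GIMP, go to Layer > Transform > Offset, click “By width/2”, and select “Wrap around” for Edge Behavior. This moves the left/right seam to the middle of the image, where it is easy to inspect and fix.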
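Here is a minimal sketch of what a half-width offset with wrap-around does to a panorama, treating each row as a list of pixel values. It is an illustration of the idea, not GIMP's code; for an even width, shifting left or right by half gives the same result, and running it twice puts the image back.

```python
def offset_half_width(image):
    """Shift every row right by half its width, wrapping pixels around."""
    out = []
    for row in image:
        w = len(row)
        shift = w // 2
        # Pixel i of the new row comes from pixel (i - shift) mod w of the old
        # row, so pixels pushed off the right edge reappear on the left.
        out.append([row[(i - shift) % w] for i in range(w)])
    return out


# A one-row "panorama" whose seam sits between the last pixel (7) and the first (0).
panorama = [[0, 1, 2, 3, 4, 5, 6, 7]]
print(offset_half_width(panorama))
```

After the shift, the old 7 | 0 seam sits in the middle of the row, which is where you want it while touching it up.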

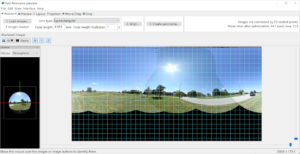

Vahana VR & VideoStitch Studio

Vahana VR & VideoStitch Studio: software to create immersive 360° VR video, live and in post-production.

I came across this by accident today. I don’t yet have a dual-camera setup to work with, but I want to be prepared. I found a discussion thread about the software, and then the thread turned to sadness with a post saying that the company making it had folded. At the end, though, was another post linking to a thread cheering the software’s revival as an open-source project under the MIT License.

https://github.com/stitchEm/stitchEm

Honestly, it has been a few years since I last built my own packages. Hopefully I can satisfy at least the dependencies through package managers. (If I had time to compile everything, I would still be running Gentoo. It ran mighty fast, but the compile time added up.)

If and when I manage to try it out, I will be sure to post an update.